UDC 004.032.26

IMPLEMENTATION AND USE OF NEURAL NETWORKS IN DAILY LIFE

Student Kotikov Daniil Sergeevich,

Academic Advisor: PhD in Technical Sciences, Associate Professor

Zaitseva Tatyana Valentinovna,

Belgorod State National Research University,

Russia, Belgorod

Abstract. This article discusses the principles of building and training neural networks, as well as the operating algorithms and key features of the most popular and promising neural networks in use today.

Keywords: neural network, artificial intelligence, search engines, Google, Yandex, OpenAI.

Today, artificial intelligence is widely used and helps solve problems in many different fields. One of the most promising directions in artificial intelligence is neural networks: mathematical models built on the principles by which biological neural networks, that is, networks of nerve cells in a living organism, are organized and function. They are already widely used in marketing, security, entertainment, and a variety of other industries. Leading companies such as Google, Yandex, and Microsoft conduct research in this area, which drives further development and new discoveries.

As mentioned above, artificial neural networks are built on principles similar to those of biological networks, although with a number of simplifying assumptions. They consist of many interconnected simple processors (neurons) and can be trained, loosely as the human brain learns. Training a neural network means tuning its architecture (the structure of the connections between neurons) and the weights of its synaptic connections so that it solves a specific problem effectively. As a rule, training is carried out on a data sample made up of training examples. Learning algorithms fall into two main types: supervised and unsupervised. Each is briefly described below:

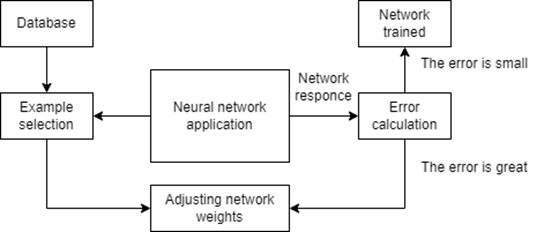

1. The process of training a neural network with a teacher consists in presenting a network of a sample of training examples, and each of the examples is fed to the inputs of the network, is processed within its structure, then the output signal of the network is calculated, compared with the corresponding values of the target vectors, which are the required output values. Then the error is calculated and the weight coefficients of connections within the neural network change, this process also depends on the chosen algorithm. This process is carried out with each of the vectors until the minimum acceptable result is reached (Figure 1).

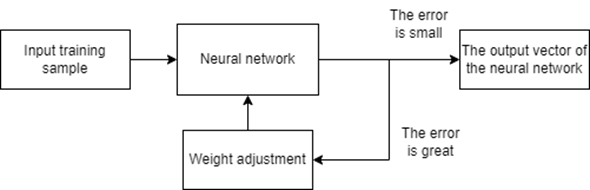

2. In unsupervised learning, the learning set consists only of input vectors. The algorithm changes the weight coefficients so that when sufficiently close input vectors are presented, the same outputs are obtained. In the learning process, similar vectors are combined according to statistical properties and form classes, so the presentation of a vector from this class to the input gives a certain output vector at the output (Figure 2).

Figure 1. Scheme of the learning process of a neural network with a teacher.

Figure 2. Scheme of the learning process of a neural network without a teacher.
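The two training schemes can be illustrated with a minimal Python sketch. It is not taken from any particular library: the supervised part uses the classic perceptron rule on a single neuron, and the unsupervised part uses simple winner-take-all competitive learning. The function names, the toy data, the learning rates, and the starting prototypes are all assumptions chosen for illustration.

```python
def predict(weights, bias, inputs):
    """Step-activation neuron: 1 if the weighted sum is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_supervised(samples, epochs=100, lr=1.0):
    """Supervised learning: each sample is an (inputs, target) pair."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        total_error = 0
        for inputs, target in samples:
            # Compare the network output with the target value.
            error = target - predict(weights, bias, inputs)
            total_error += abs(error)
            # Adjust the weights in proportion to the error.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
        if total_error == 0:  # every example is reproduced correctly
            break
    return weights, bias


def nearest(prototypes, vector):
    """Index of the prototype closest to the vector (squared distance)."""
    distances = [sum((p - x) ** 2 for p, x in zip(proto, vector))
                 for proto in prototypes]
    return distances.index(min(distances))


def train_unsupervised(vectors, prototypes, epochs=20, lr=0.5):
    """Unsupervised learning: input vectors only, no target values."""
    prototypes = [list(p) for p in prototypes]
    for _ in range(epochs):
        for vector in vectors:
            winner = nearest(prototypes, vector)
            # Move only the winning prototype toward the input vector.
            prototypes[winner] = [p + lr * (x - p)
                                  for p, x in zip(prototypes[winner], vector)]
    return prototypes


# Supervised: learn the logical AND function from labelled examples.
and_samples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_supervised(and_samples)
print([predict(weights, bias, x) for x, _ in and_samples])

# Unsupervised: two groups of nearby points end up in two classes.
points = [[0, 0], [0, 1], [1, 0], [9, 9], [9, 10], [10, 9]]
prototypes = train_unsupervised(points, prototypes=[[0, 0], [10, 10]])
print([nearest(prototypes, p) for p in points])
```

In the supervised case the loop stops once every example is classified correctly, which corresponds to the error reaching an acceptable level. In the unsupervised case no targets are given, yet close input vectors are mapped to the same class index.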

Among the most popular neural networks, ChatGPT deserves special mention: a chatbot developed by OpenAI that works in a conversational mode. Its advantage is that it can handle many everyday tasks, from writing an essay for a school student to generating code for a programmer. The latter is especially relevant, since many IT professionals rely on it and integrate access to the model into their own software, for example through a bot.

Next, we consider significant algorithms and methods behind a neural network, using ChatGPT as an example. A distinctive feature is ease of use: the network does not require a specially formatted query and understands tasks stated in natural language. This ability comes from training on large volumes of text; prompt engineering, the practice of wording a request so that the model produces the desired result, builds on it. Text is generated by autoregression: the model repeatedly predicts the most likely next token (a word or word fragment) from the preceding ones.
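Autoregressive generation can be sketched with a toy model. The example below is only an illustration, not how ChatGPT is implemented: it replaces the neural network with a table of bigram counts, so each word is predicted from the single previous word rather than from the whole preceding text. The corpus and the function names are assumptions made for the example.

```python
from collections import Counter, defaultdict


def build_bigram_model(text):
    """Count how often each word follows each other word."""
    words = text.split()
    model = defaultdict(Counter)
    for previous, following in zip(words, words[1:]):
        model[previous][following] += 1
    return model


def generate(model, start, max_words=5):
    """Repeatedly append the most frequent continuation of the last word."""
    sequence = [start]
    for _ in range(max_words):
        candidates = model.get(sequence[-1])
        if not candidates:  # no known continuation: stop generating
            break
        next_word, _count = candidates.most_common(1)[0]
        sequence.append(next_word)
    return " ".join(sequence)


corpus = "roses are red violets are blue roses are red"
model = build_bigram_model(corpus)
print(generate(model, "roses", max_words=2))  # prints "roses are red"
```

The essential loop is the same as in a large language model: predict the next item, append it to the sequence, and use the extended sequence as the input for the next prediction.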

Another important attribute of ChatGPT is its transformer architecture, which was developed by researchers at Google Research and Google Brain. Unlike a recurrent neural network, which processes a sequence step by step, the transformer processes all positions in parallel, which significantly speeds up computation, especially during training. A further advantage is its handling of long-range context: the attention mechanism lets the model take the earlier text into account, so the output stays contextually consistent. The transformer is a complex architecture on which modern neural networks that work with text and other media depend.

Machine learning models operate on numbers rather than text, so text must first be tokenized, that is, converted into a sequence of numbers.

The easiest way to do this is to assign each unique word its own number, a token, and then replace every word in the text with its number. But there is a problem: there are millions of words and word forms, so the resulting word-to-number dictionary becomes too large, which makes the model hard to train. Alternatively, the text can be split into individual characters rather than words (char-level tokenization). The dictionary then holds only a few dozen tokens, but the tokenized text becomes very long, which also makes training difficult. For this reason, modern language models typically take an intermediate approach and split text into subword units.
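The trade-off between the two simple schemes can be seen in a short sketch. The helper names and the sample sentence are assumptions for illustration; production tokenizers are considerably more involved.

```python
def build_vocab(units):
    """Assign each distinct unit the next free integer id."""
    vocab = {}
    for unit in units:
        if unit not in vocab:
            vocab[unit] = len(vocab)
    return vocab


def encode(units, vocab):
    """Replace every unit with its integer id."""
    return [vocab[unit] for unit in units]


text = "the cat saw the other cat"

# Word-level tokenization: one token per word.
word_units = text.split()
word_vocab = build_vocab(word_units)
word_ids = encode(word_units, word_vocab)

# Char-level tokenization: one token per character, spaces included.
char_units = list(text)
char_vocab = build_vocab(char_units)
char_ids = encode(char_units, char_vocab)

print(word_ids)                      # prints [0, 1, 2, 0, 3, 1]
print(len(word_ids), len(char_ids))  # prints 6 25
```

On such a tiny sample only the difference in sequence length is visible (6 tokens versus 25). The difference in dictionary size appears on a real corpus, where the word dictionary keeps growing while the character dictionary stays at a few dozen entries.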

The Yolo convolutional neural network has recently joined the programmer's toolkit for building object recognition systems. It processes an image quickly because, unlike many other CNN-based detectors, it does not need to examine the image repeatedly: a single pass is enough. The name speaks for itself: YOLO stands for “You Only Look Once.”

Yolo processes an image as follows: the image is divided into a grid of cells, bounding boxes are then predicted for the objects, and the parameters of each box are calculated. These parameters are the coordinates of the box and its center (in a coordinate system whose origin is the upper left corner of the image) and its objectness score, which expresses the probability that the bounding box actually contains an object.

Thus, Yolo's speed makes it an excellent tool for building computer vision systems, which is why programmers have widely adopted it in projects that require fast object detection.
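The relation between a bounding box and the grid can be sketched as follows. This is a simplified illustration of the encoding idea, not code from any Yolo release: the 7 by 7 grid, the 448 by 448 image size, the sample box, and the function name `encode_box` are assumptions, and the objectness score is omitted because it is predicted by the network rather than computed from coordinates.

```python
def encode_box(box, image_w, image_h, grid=7):
    """Map a pixel box (x_min, y_min, x_max, y_max) to its grid cell.

    Coordinates are measured from the upper left corner of the image.
    Returns (row, col, (x_offset, y_offset, width, height)), where the
    offsets locate the box center inside its cell and the width and
    height are fractions of the whole image.
    """
    x_min, y_min, x_max, y_max = box
    # Center of the box, normalized to the range 0..1.
    cx = (x_min + x_max) / 2 / image_w
    cy = (y_min + y_max) / 2 / image_h
    # The grid cell that contains the center is responsible for the object.
    col = min(int(cx * grid), grid - 1)
    row = min(int(cy * grid), grid - 1)
    x_offset = cx * grid - col
    y_offset = cy * grid - row
    width = (x_max - x_min) / image_w
    height = (y_max - y_min) / image_h
    return row, col, (x_offset, y_offset, width, height)


row, col, params = encode_box((200, 100, 300, 200), image_w=448, image_h=448)
print(row, col)  # prints 2 3
print(params)
```

Because every cell produces its predictions in the same forward pass, the whole image is analyzed once, which is the source of the speed advantage.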

Generative language models also deserve attention. A good example is YaLM (Yet another Language Model), developed by Yandex in 2021. It learns the principles by which text is constructed, drawing on knowledge about the world and on the norms and rules of the Russian and English languages.

Like many other large language models from the world's leading companies (BERT, GPT, LaMDA), it is based on the Transformer architecture. Such a model has exactly one task: to generate each subsequent word of a text. To make the text coherent and grammatically correct, during training the model scores each candidate word: for example, it decides whether “run” or “red” should follow “Roses are ...”.
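How a model chooses between candidate words can be sketched with a softmax over scores. The numeric scores below are invented for illustration and do not come from YaLM or any real model; the function name is likewise an assumption. The sketch also shows the cross-entropy loss, a standard training signal that penalizes the model when it assigns a low probability to the word actually found in the training text.

```python
import math


def next_word_probabilities(scores):
    """Convert raw candidate scores into probabilities with softmax."""
    highest = max(scores.values())
    exps = {word: math.exp(s - highest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


# Hypothetical scores for continuations of "Roses are ...".
scores = {"red": 4.0, "run": 0.5}
probabilities = next_word_probabilities(scores)
best = max(probabilities, key=probabilities.get)
print(best)  # prints "red"

# Cross-entropy loss when the training text actually continues with "red":
# the higher the probability given to the correct word, the lower the loss.
loss = -math.log(probabilities["red"])
print(loss)
```

During training, the weights are adjusted to reduce this loss over a large body of text, which gradually makes grammatical and plausible continuations more probable than implausible ones.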

The network comes in versions with 1 to 100 billion parameters; it was trained on Yandex supercomputers and processed several terabytes of text during training. YaLM is used in more than 20 Yandex projects, which makes it quite popular: it helps the voice assistant Alice communicate better with users and generates quick-answer cards in Search. YaLM can also be used to generate an advertisement or a site description.

The above covers only the most popular and sought-after neural networks in their fields. Thanks to neural networks, the annual volume of investment in AI has grown 15-fold since 2011. This is only the beginning, however: the number of startups in this area is already in the tens of thousands, and according to analysts, hundreds of them will develop into quite large-scale projects within a few years. The active development of neural networks brings improvement and relief to many areas of human life.

© Котиков Д. С., Пискун Д. В., 2023
